ChatGPT, launched by OpenAI in late November 2022, is the new talk of the town.

Note: I started writing this piece in early January 2023 and, as you will see, things are moving really fast in the generative AI field.

Everyone’s raving about its user-friendliness and the mind-blowing variety of its skills: it can generate both a piece of fiction out of thin air and a functional Python script. We’ve seen people using it to write cover letters, personal statements, school essays and political speeches. I even wrote a song with it, called Crypto Winter.

Two weeks ago, I subscribed to a Google Alert for ChatGPT, and it’s now one of the longest notification emails I receive every morning. Everyone, from writers to lawyers, real estate agents, students, teachers, developers and even politicians, seems to be talking about ChatGPT. Dozens of startups are raising huge rounds around generative AI offerings.

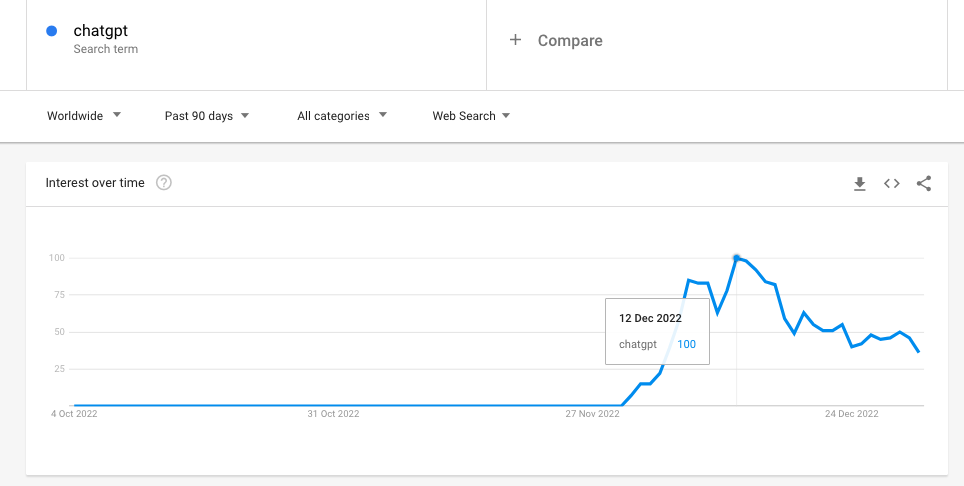

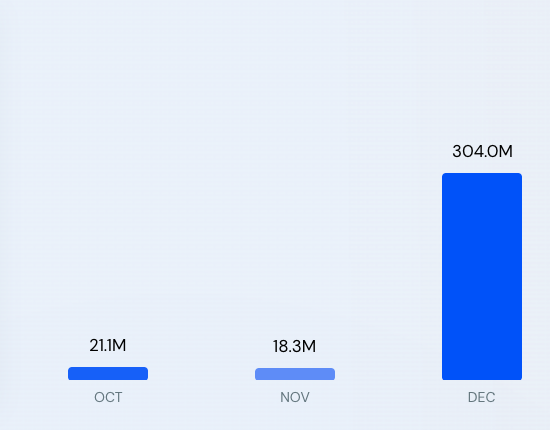

To be fair, the initial curiosity peaked on Dec 12, 2022, but there’s no doubt that this groundbreaking application will have a profound impact on a wide range of use cases, not just on cheap copywriting and translation services.

ChatGPT is even presented as a potential “Code Red” threat for the absolute leader in search, the almighty Google.

OpenAI’s chatbot could be the most convincing contender yet to overtake a company that has been dominating the search industry for more than 15 years (does anyone still remember Lycos, AltaVista or Excite?).

How many users does ChatGPT have?

ChatGPT is by far the fastest-growing consumer product in the history of the web: it reportedly reached 100 million monthly active users by the end of January 2023. That’s stellar growth. If you ask people around you, you’ll probably hear that most of them have already tried the app in some capacity, be it to write essays, emails or ad copy.

See the growth of estimated traffic for openai.com (Source: Similarweb), the domain hosting ChatGPT.

ChatGPT is free. For how long?

At the time of writing (Jan. 2023), ChatGPT is still free to use.

It costs A LOT to operate the clusters of GPU servers driving the AI magic behind the scenes. Microsoft, which invested $1 billion in OpenAI in 2019 and holds an exclusive commercial license to the tech developed by OpenAI, is currently footing the server bills.

According to Tom Goldstein, associate professor of Computer Science at the University of Maryland, the chatbot would cost at least $100K per day / $3M per month in computing resources.

OpenAI’s CEO Sam Altman recently admitted that the average cost was “single-digit cents” per conversation. Multiply that by millions of conversations per day and you reach the estimated $100K.
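This minimal Python sketch sanity-checks the claim. It takes Goldstein's $100K-per-day estimate as given. Because Altman gave no exact figure, it assumes anything from 1 to 9 cents per conversation, then works backwards to the number of daily conversations each cost would imply.

```python
# Back-of-envelope check: does "single-digit cents per conversation"
# square with an estimated compute bill of ~$100K per day?
# Both inputs come from the article; the conversation counts are derived.

DAILY_COMPUTE_COST = 100_000  # USD per day (Goldstein's lower-bound estimate)
DAYS_PER_MONTH = 30


def implied_conversations(cost_per_conversation: float,
                          daily_cost: float = DAILY_COMPUTE_COST) -> float:
    """Conversations per day needed to spend the whole daily compute budget."""
    return daily_cost / cost_per_conversation


print(f"Monthly bill: ${DAILY_COMPUTE_COST * DAYS_PER_MONTH:,}")
for cents in (1, 5, 9):  # assumed range for "single-digit" cents
    conversations = implied_conversations(cents / 100)
    print(f"{cents} cent(s) per conversation -> {conversations:,.0f} conversations/day")
```

Even at the top of the range, the budget corresponds to more than a million conversations a day, so the two estimates are at least consistent with each other.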

It took only 5 days for ChatGPT to reach 1 million active users!

The fastest adoption of all time for a consumer-facing application.

At this stage, the millions of free users help OpenAI refine the quality of the engine.

Many internet users have expressed their willingness to pay for the service if it stays ad-free and keeps improving its factual accuracy. That would mean moving beyond a “simple” predictive model that currently outputs text tokens according to their probability of occurrence, irrespective of any actual knowledge of the topic.

Does ChatGPT actually know anything?

The AI doesn’t “know” anything, it just predicts “what comes next” in a probabilistic way.

When it’s applied to topics on which it has ingested a lot of text, the token occurrence probabilities tend to align with accurate facts. When you ask the model about something that hadn’t been covered extensively on the open web before late 2021 (the cut-off date of its training data), it tends to generate pure “plausible fiction”.
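Here is a toy Python sketch that makes “predicting what comes next” concrete. Both probability tables are invented for illustration; a real model derives such distributions from its training data with a neural network. The well-covered prompt puts almost all of its probability on the accurate answer. The obscure prompt spreads it across several equally plausible guesses.

```python
import random

# Toy next-token distributions, invented for illustration only. A real model
# such as GPT-3 computes them with a neural network over a vocabulary of tens
# of thousands of tokens.
NEXT_TOKEN_PROBS = {
    # Topic covered extensively in the training text: the probability mass
    # concentrates on the accurate continuation.
    "The capital of France is": {"Paris": 0.95, "Lyon": 0.03, "Marseille": 0.02},
    # Obscure topic: the mass is spread over equally "plausible" options.
    "This post's author was born in": {"Paris": 0.3, "1835": 0.3, "Lyon": 0.2, "1906": 0.2},
}


def most_likely_token(context: str) -> str:
    """Greedy decoding: return the highest-probability next token."""
    probs = NEXT_TOKEN_PROBS[context]
    return max(probs, key=probs.get)


def sample_next_token(context: str, rng: random.Random) -> str:
    """Sampling: draw the next token in proportion to its probability."""
    probs = NEXT_TOKEN_PROBS[context]
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


rng = random.Random(0)  # fixed seed so runs are reproducible
for prompt in NEXT_TOKEN_PROBS:
    samples = [sample_next_token(prompt, rng) for _ in range(5)]
    print(prompt, "->", most_likely_token(prompt), samples)
```

Neither table stores whether an answer is true, only how likely it is to follow the prompt. That is how an obscure prompt can produce a confident but fictional biography.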

For instance, if you ask GPT-3 to write a biography of this post’s author, Frederick Tubiermont, who operates far from the spotlight of mainstream media, here’s the output:

Frederick Tubiermont was born on May 17, 1835, in Paris, France. He was the son of a wealthy banker and was educated at the École Polytechnique. He began his career in the French military and eventually rose to the rank of captain.

In 1861, Tubiermont was assigned to the French Army of the Potomac, a force sent to the United States to support the Union in the Civil War. Tubiermont distinguished himself in several battles, including the Battle of Gettysburg. After the war, he returned to France, where he was promoted to the rank of colonel.

Tubiermont devoted much of his life to the French military and was one of the most decorated officers in the French Army. He was a member of the Legion of Honor and received the Cross of the Order of the Iron Crown, as well as a number of other awards.

In 1871, Tubiermont was appointed as the Inspector-General of the French Army, a post he held until his death in 1895. He was a staunch advocate for military reforms and modernized much of the French Army’s equipment and tactics. He also initiated a number of social reforms aimed at improving the conditions of the French soldiers.

Tubiermont’s legacy still lives on today. In his honor, the French military has established the Tubiermont Prize, an annual award given to the best performance by a French Army officer. He is remembered as one of the most distinguished officers in the French Army, and his legacy will continue to be celebrated for many years to come.

I have absolutely no clue about the data that inspired my fake biography, but I can tell you for a fact that I have no illustrious ancestor in the French Army of the Potomac and that I’m still alive and well.

In a parallel universe, according to GPT-3’s sibling ChatGPT, I was apparently a popular pianist in the early 20th century.

In 1906, Tubiermont made his debut as a solo pianist with the Berlin Philharmonic Orchestra. This performance marked the beginning of a successful career as a classical pianist and composer, and he went on to tour extensively throughout Europe and the United States.

What is the ideal premium price for ChatGPT?

Circling back to our topic, it seems clear that users would be ready to pay a small premium to keep playing with ChatGPT, which proves to be both fun and pretty useful.

The question is: would a modest price tag (let’s say a Netflix-like fee or roughly $15 per month) be enough to cover the operating costs?

Tom Goldstein estimates that each word costs Microsoft / OpenAI $0.0003 in computing power. A typical 30-word reply therefore costs roughly 1 cent ($0.009). An exchange requiring 5 short replies ≈ $0.05, and 1M such conversations ≈ $50,000, which is likely the bare minimum, not taking into account all the other expenses to develop and run the model.
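This short Python sketch reproduces the arithmetic using only Goldstein's $0.0003-per-word figure. Without intermediate rounding, a 30-word reply costs $0.009, a 5-reply exchange costs $0.045 and 1M conversations come to about $45,000. Rounding each reply up to one cent, as done above, gives the $0.05 and $50,000 figures.

```python
# Goldstein's per-word compute estimate, as quoted in the article.
COST_PER_WORD = 0.0003  # USD of GPU time per generated word


def reply_cost(words: int = 30) -> float:
    """Compute cost of one reply of `words` words."""
    return words * COST_PER_WORD


def conversation_cost(replies: int = 5, words_per_reply: int = 30) -> float:
    """Compute cost of a conversation made of several short replies."""
    return replies * reply_cost(words_per_reply)


exact = conversation_cost()
rounded = 5 * round(reply_cost(), 2)  # each reply rounded to $0.01, as in the text

print(f"30-word reply: ${reply_cost():.4f}")
print(f"5-reply conversation: ${exact:.3f} exact, ${rounded:.2f} rounded")
print(f"1M conversations: ${exact * 1_000_000:,.0f} exact, "
      f"${rounded * 1_000_000:,.0f} rounded")
```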

Let’s say that you’d want to prompt ChatGPT twice per hour for 8 hours per day in a simple question-answer format, with 2 additional clarification questions for each exchange. It would represent a cost of about $0.48 per day (8 hours * 2 conversations * 3 answers * $0.01). Multiplied by 30 days, that’s $14.40 per month. Let’s say $20 if some of the replies are longer than 30 words and if you’re prompting the engine more often than twice per hour. To make even a tiny margin on each paying user, OpenAI would need to charge at least $25 per month for a basic plan offering up to 1,500 words per day (45,000 per month).
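This minimal Python sketch models the per-user estimate under the same assumptions: 8 hours a day, 2 conversations per hour, 3 answers per conversation, one cent per answer and 30 days. It also shows that this pattern produces about 1,440 words a day, close to the proposed 1,500-word daily cap. The full 45,000-word monthly cap would cost roughly $13.50 in compute at $0.0003 per word. The function name `usage_cost` is only an illustrative choice.

```python
COST_PER_ANSWER = 0.01   # USD: a ~30-word reply, rounded up to one cent
WORDS_PER_ANSWER = 30
COST_PER_WORD = 0.0003   # USD, Goldstein's per-word estimate


def usage_cost(hours_per_day: int = 8,
               conversations_per_hour: int = 2,
               answers_per_conversation: int = 3,
               days: int = 30) -> tuple[float, float, int]:
    """Return (daily cost, monthly cost, words generated per day) for one user."""
    answers_per_day = hours_per_day * conversations_per_hour * answers_per_conversation
    daily = answers_per_day * COST_PER_ANSWER
    return daily, daily * days, answers_per_day * WORDS_PER_ANSWER


daily, monthly, words_per_day = usage_cost()
print(f"${daily:.2f} per day, ${monthly:.2f} per month, {words_per_day:,} words per day")

# Compute cost of the proposed basic-plan cap of 45,000 words per month.
print(f"45,000 words/month cost ${45_000 * COST_PER_WORD:.2f} in compute")
```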

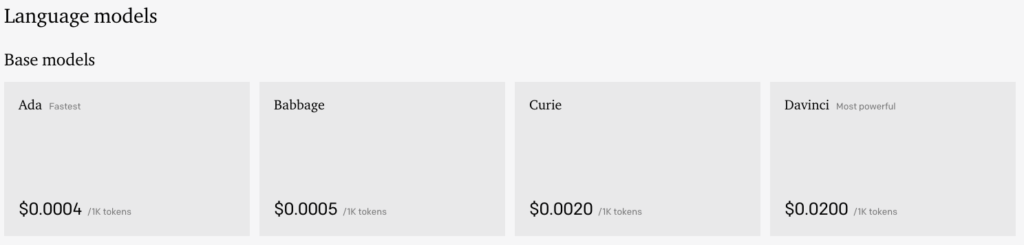

As a matter of fact, Jasper.ai, a unicorn startup which at the end of the day is “just” a user-friendly abstraction layer built on top of GPT-3, charges $40 per month for 35,000 words.

Those same 35,000 words would cost you roughly $0.93 if purchased wholesale from OpenAI, based on a package sold at $0.02 per 1,000 tokens (≈ 750 words), see below: 35K / 750 * 0.02 = $0.933…
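This Python sketch performs the wholesale calculation. It assumes the rule of thumb of roughly 750 English words per 1,000 tokens and counts output tokens only, although prompt tokens are billed too. For comparison, it also prices the 45,000-word monthly plan discussed earlier.

```python
PRICE_PER_1K_TOKENS = 0.02  # USD, wholesale price quoted in the article
WORDS_PER_1K_TOKENS = 750   # rule of thumb: 1,000 tokens is roughly 750 English words


def wholesale_cost(words: int) -> float:
    """Approximate API cost of generating `words` words (output tokens only)."""
    return words / WORDS_PER_1K_TOKENS * PRICE_PER_1K_TOKENS


for label, words in [("Jasper's $40 plan", 35_000), ("basic plan cap", 45_000)]:
    print(f"{label}: {words:,} words -> ${wholesale_cost(words):.2f} wholesale")
```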

💰 Jasper makes a very healthy margin from this smart arbitrage.

But OpenAI would be selling its premium output at a loss if we believe the estimates from Tom Goldstein.

Every single word produced by the Davinci-003 model on GPT-3 is currently sold at about $0.000027 (excluding the cost of the tokens in the prompt, which OpenAI also bills for, hence the need to improve your prompt engineering skills), while costing $0.0003 in computing power, i.e. roughly 10x more.
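This Python sketch makes the same comparison using only the figures above: $0.02 per 1,000 tokens, ~750 words per 1,000 tokens and $0.0003 of compute per word. It ignores prompt tokens. The computed ratio comes out at about 11, consistent with “roughly 10x”.

```python
PRICE_PER_1K_TOKENS = 0.02      # USD, wholesale price of the Davinci model
WORDS_PER_1K_TOKENS = 750       # rule of thumb: ~750 words per 1,000 tokens
COMPUTE_COST_PER_WORD = 0.0003  # USD, Goldstein's compute estimate

# Price charged per generated word (prompt tokens excluded).
price_per_word = PRICE_PER_1K_TOKENS / WORDS_PER_1K_TOKENS
# How many times the compute cost exceeds the sale price.
cost_to_price = COMPUTE_COST_PER_WORD / price_per_word

print(f"Sale price per word: ${price_per_word:.7f}")
print(f"Compute cost is about {cost_to_price:.0f}x the sale price")
```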

Either OpenAI manages to drastically reduce the server costs of running the model or it will have, at some point, to increase its wholesale price.

Jasper (and others), which currently charges more than 40x the wholesale price, would still make a great margin even if its AI bill went up 10x (they probably already pay less than the advertised $0.02 per 1K tokens).
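This Python sketch illustrates the margin argument. It assumes Jasper pays OpenAI's list price, although the paragraph suggests it pays less. It also ignores every other cost, such as prompt tokens, staff or marketing. `gross_margin` is a hypothetical helper, not a figure Jasper publishes.

```python
JASPER_PRICE = 40.0                   # USD per month for 35,000 words
WHOLESALE_COST = 35_000 / 750 * 0.02  # ~$0.93 at $0.02 per 1K tokens


def gross_margin(price: float, ai_cost: float) -> float:
    """Share of revenue left after paying for the AI output alone."""
    return (price - ai_cost) / price


for multiplier in (1, 10):  # today's list price, and a hypothetical 10x increase
    cost = WHOLESALE_COST * multiplier
    print(f"AI bill x{multiplier}: ${cost:.2f}, "
          f"price/cost {JASPER_PRICE / cost:.1f}x, "
          f"margin {gross_margin(JASPER_PRICE, cost):.0%}")
```

With these assumptions, the margin on AI costs drops from roughly 98% to roughly 77%. That is still comfortable, which is the point of the paragraph above.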

👉 I don’t see casual users paying $40+ per month to use a GPT model, but I would definitely see a market for a consumer product priced under $15 per month, in line with other digital utilities (mobile phone bill, streaming, etc.).

Did OpenAI start charging for ChatGPT?

Yes, it happened!

UPDATE FEB 1, 2023: OpenAI has just started rolling out ChatGPT Plus in the US, at $20 per month.

The premium plan will give its users the following features:

- More reliable access to ChatGPT even during peak hours

- Faster response times

- Priority access to new features and enhancements

The early adopters will be gradually onboarded from the waiting list launched a few weeks ago.

Is there an API for ChatGPT?

There is indeed. On March 1st, 2023, OpenAI announced the opening of the ChatGPT API to all developers (it had been tested by leading companies such as Shopify, Snap and Instacart). More about the launch in my recent article.

Actually, you might not have to pay for ChatGPT… sort of.

Microsoft has just announced that they would integrate ChatGPT-like features in their Bing search engine in March 2023. It’s still unclear how the conversational engine will be implemented.

It will be interesting to see how this could improve Bing’s tiny market share in Search.

Latest News (Jan 10, 2023): Microsoft would invest $10 billion in OpenAI at a $29B valuation, receiving 75% of OpenAI’s profits until it recoups its $10B.

WSJ reporting that OpenAI is in talks for tender offer at a valuation of $29B. That would make it the 6th most valuable unicorn in the US:

1. SpaceX ($137B)

2. Stripe ($74B)

3. Epic Games ($32B)

4. Databricks ($31B)

5. Fanatics ($31B)

6. OpenAI ($29B)

— Trung Phan (@TrungTPhan) January 5, 2023

According to StatCounter, Google dominates the vertical with a whopping 92.5% market share, followed by Bing, with only 3.04%. Will casual surfers start “Binging” instead of “Googling”? Will Google be the BlackBerry / Nokia of Search, with Microsoft playing the Apple of the category and leveraging ChatGPT the way Steve Jobs harnessed the iPhone to dominate the (smart)phone category? Or shall we witness a new social media-type scenario, with OpenAI itself (or another player, still unknown to this day) acting as Facebook dethroning Myspace?

Time or, more accurately, users will tell.

Subscribe to my weekly newsletter packed with tips & tricks around AI, SEO, coding and smart automations
