I wrote this article back in 2019. The topic is now outdated since Amazon closed its Alexa service in May 2022. I invite you to use Similarweb, Ahrefs, Semrush, SE Ranking, Serpstat and the like to evaluate the organic traffic of any website. If you don’t know how to use those tools, don’t hesitate to contact me; I’ll be happy to help.

Here’s the archive of the article written in 2019. Time’s flying so fast!

👉 The article still contains some interesting / valid points related to the way you can automate API calls via Make / Integromat.

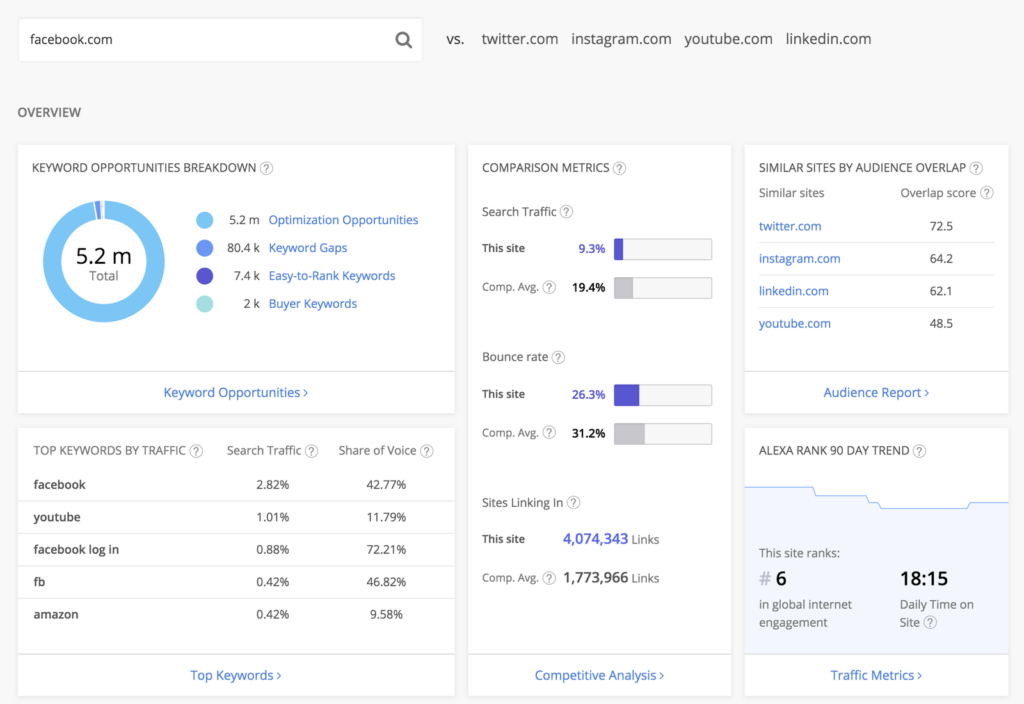

It’s important to know where you stand vs your competitors. A quick way to assess the popularity of a website is to check its Alexa rank.

You can do it manually by appending the URL of the website to http://alexa.com/siteinfo/

For example, here’s the result for Facebook.com via the query http://alexa.com/siteinfo/facebook.com

You can also find a list of the 500 most popular websites on https://www.alexa.com/topsites

Bulk query to get the Alexa rank of 150 websites

But imagine you have a list of 150 websites you want to research. It would take you at least 1 hour to query 150 URLs and compile the results.

Wouldn’t it be nice to get these results in a few seconds? With a bonus: the estimate of the daily traffic for each of those websites.

Using MyIP.ms API + Integromat + Google Sheets to retrieve the Alexa rank & number of daily visitors

I’ve already posted a few articles about MyIP.ms, a great tool if you want, for instance, to find the most popular Shopify websites.

If you sign up for a free account, you get 150 API credits, which you can use to automate your research process.

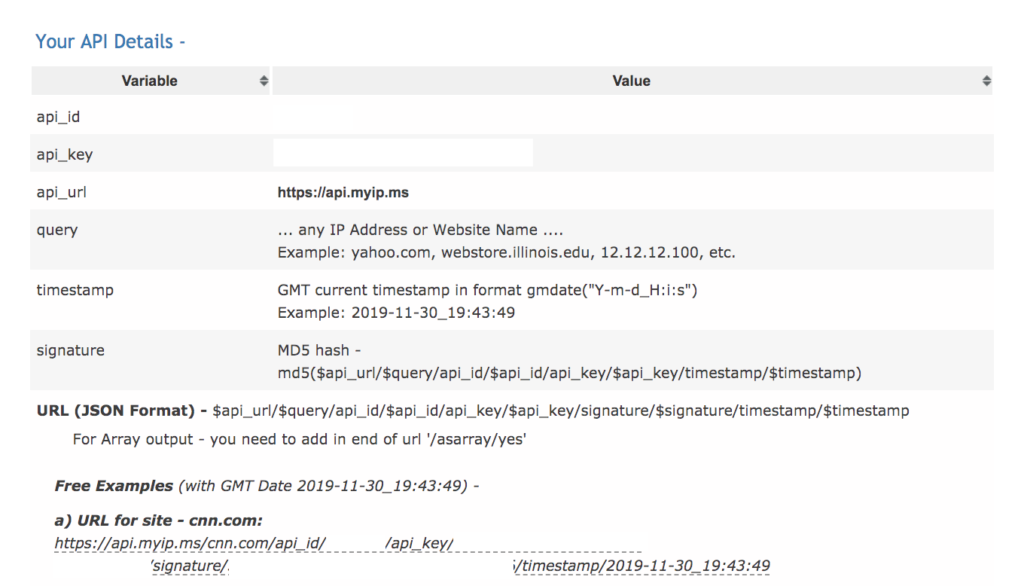

The API dashboard gives you an api_id and an api_key (I’ve masked them on my screenshot). You have to follow the instructions to build your API call.

The trickiest parts are the md5 hash for the signature and the format of the timestamp (24h GMT).

It’s crucial to respect the structure of the API call. Otherwise, you’ll get an error instead of the expected data.

If you’re not familiar with Integromat, think of it as an advanced form of Zapier / IFTTT that displays your automation rules in a visual format.

I’m a huge fan of automations built upon Google Sheets.

Google Sheets will be the start & end point of this case study.

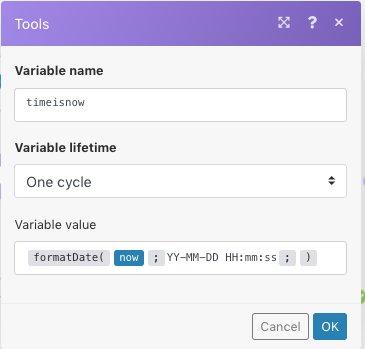

The Integromat scenario watches for new rows in my sheet (each new row being a separate URL), then sets a timestamp, which I can use in my API call to myip.ms.

I use a Set Timestamp module for 2 reasons:

1° the timestamp has to be exactly the same both in the md5 for the signature and at the end of the API call (that’s how they check the validity of the API call).

2° it has to be presented in GMT 24h format. I can easily achieve this with the date formatter provided by Integromat. Please note that HH for hours sets the format to 24h (instead of AM/PM).
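For readers who prefer code to the visual builder, here is a minimal Python sketch of the same timestamp step. The exact layout of the timestamp (`TIMESTAMP_FORMAT` below) is an assumption on my part, so check it against the instructions on your API dashboard. The point it illustrates is the 24h GMT part: `%H` is the 24-hour clock, while `%I` would give the 12-hour one.

```python
from datetime import datetime, timezone

# ASSUMPTION: the layout below (date, underscore, time) is illustrative only.
# Use the exact format given on the myip.ms API dashboard.
TIMESTAMP_FORMAT = "%Y-%m-%d_%H:%M:%S"


def gmt_timestamp(now=None):
    """Format a moment in time as GMT/UTC using a 24-hour clock."""
    if now is None:
        now = datetime.now(timezone.utc)
    # Convert to UTC first so the result does not depend on the local timezone.
    return now.astimezone(timezone.utc).strftime(TIMESTAMP_FORMAT)


afternoon = datetime(2019, 6, 1, 15, 4, 5, tzinfo=timezone.utc)
print(gmt_timestamp(afternoon))   # 2019-06-01_15:04:05
print(afternoon.strftime("%I"))   # 03 (12-hour clock, not what we want here)
```

Generate the value once and store it in a variable, so the very same string can be reused wherever the call needs it.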

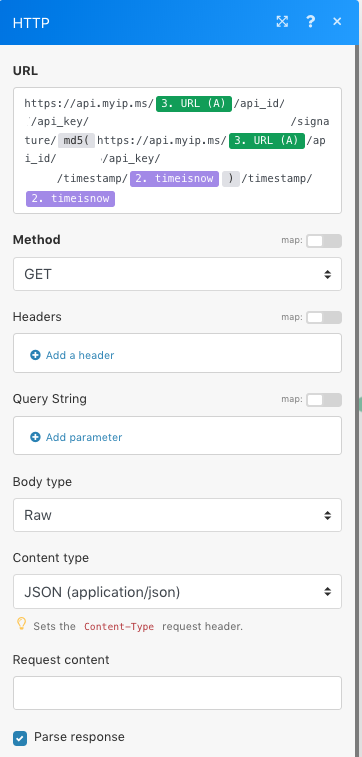

After setting the timestamp (the variable will be called timeisnow), I can build the API call (I’ve masked the sensitive data).

You can see how I’m using a reference from the Google spreadsheet to determine the URL query.

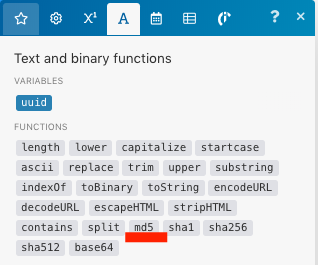

It will pull the data from each new cell in column A. I’m using the timestamp value (timeisnow) both in the md5 source and at the end of the query. The md5 is created on the fly by Integromat (md5( ) is a function you’ll find in the Integromat builder, see below).
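If you want to reproduce this step outside Integromat, the idea can be sketched in Python as below. Be careful: the URL layout, the `API_BASE` value and the function name `build_signed_url` are assumptions made for illustration, not the documented myip.ms format. Follow the structure shown on your own API dashboard. What the sketch does show is the principle described above: the md5 is computed over a string that contains the timestamp, and the same timestamp is repeated at the end of the call.

```python
import hashlib

# ASSUMPTION: base URL and path layout are illustrative, not the official format.
API_BASE = "https://api.myip.ms"


def build_signed_url(query, api_id, api_key, timestamp):
    """Build a signed call where the timestamp is used in the md5 and in the URL."""
    prefix = f"{API_BASE}/{query}/api_id/{api_id}/api_key/{api_key}"
    # The string that gets hashed already contains the timestamp...
    unsigned = f"{prefix}/timestamp/{timestamp}"
    signature = hashlib.md5(unsigned.encode("utf-8")).hexdigest()
    # ...and the exact same timestamp is appended at the end of the final call.
    return f"{prefix}/signature/{signature}/timestamp/{timestamp}"


# Placeholder credentials; replace them with the values from your dashboard.
print(build_signed_url("example.com", "id123", "key456", "2019-06-01_15:04:05"))
```

If the timestamp were generated twice (once for the hash, once for the URL), the two values could differ by a second and the signature would no longer match.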

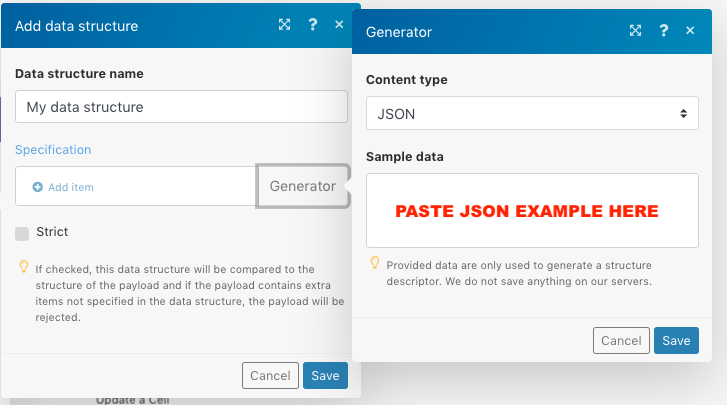

The next module, Parse JSON, will present the JSON data received from myip.ms in a usable format.

In the “sample data” field of the JSON generator, paste an example of the data returned by the API. You can easily get one by opening one of the example URLs that myip.ms lists on its API dashboard.

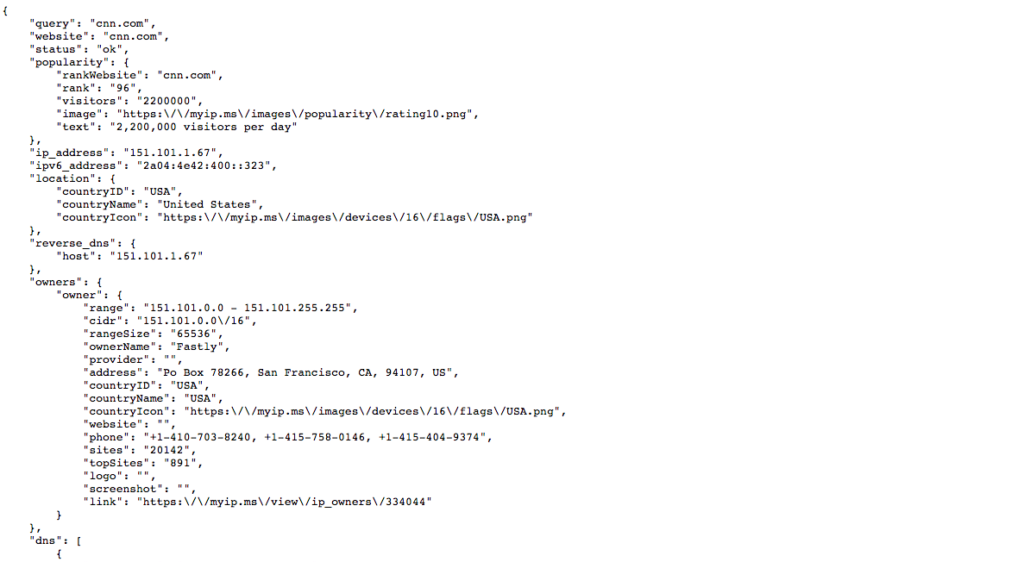

It will give you something like this (I’ve cut the screenshot below but copy-paste the full JSON content based on what you’ll get in your browser using one of the examples shared on https://myip.ms/info/api/API_Dashboard.html).

You can see that the data we’ll be using is at the top of this JSON file (rank and visitors under popularity).
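As a rough Python equivalent of the Parse JSON step, the sketch below looks for the popularity block and returns its rank and visitors values. The `SAMPLE` payload is invented for the example (its nesting and numbers are not real API output), which is why the helper searches for the key anywhere in the response instead of assuming a fixed path.

```python
import json

# ASSUMPTION: made-up payload for illustration; the real response has more fields
# and its nesting may differ.
SAMPLE = '{"websites": {"1": {"popularity": {"rank": 3, "visitors": 1500000}}}}'


def find_key(node, key):
    """Return the first value stored under `key` anywhere in a parsed JSON tree."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def extract_popularity(raw_json):
    """Return (rank, visitors) from the popularity block, or (None, None)."""
    popularity = find_key(json.loads(raw_json), "popularity")
    if not isinstance(popularity, dict):
        return None, None
    return popularity.get("rank"), popularity.get("visitors")


print(extract_popularity(SAMPLE))  # (3, 1500000)
```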

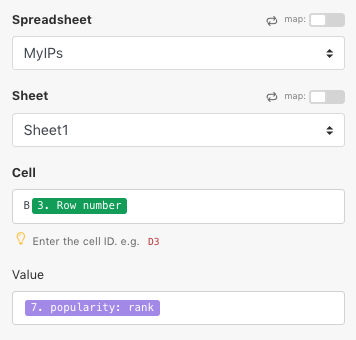

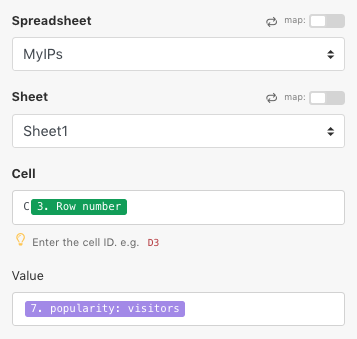

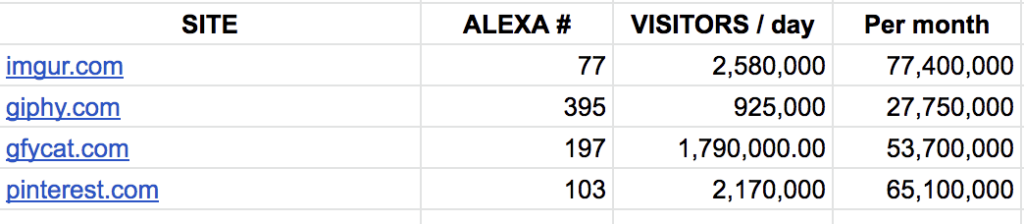

The last 2 steps update the cells in columns B and C of my spreadsheet for each processed row. Column B holds the Alexa rank and column C the number of daily visitors.

FYI, the last column is just a simple formula ( = visitors per day * 30).
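To make the row layout concrete, here is a small Python sketch of what ends up in the sheet for one URL. The function names and the sample numbers are hypothetical; only the column order (URL, rank, daily visitors) and the "× 30" formula come from the setup described above.

```python
def monthly_visitors(daily_visitors, days=30):
    """Rough monthly estimate: daily visitors multiplied by 30."""
    return daily_visitors * days


def sheet_row(url, rank, daily_visitors):
    """One spreadsheet row: A = URL, B = rank, C = daily visitors, last = monthly estimate."""
    return [url, rank, daily_visitors, monthly_visitors(daily_visitors)]


# Made-up values, for illustration only.
print(sheet_row("example.com", 1200, 5000))  # ['example.com', 1200, 5000, 150000]
```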

I run the scenario and, after a few seconds, here’s the result.

You can do this free of charge for 150 URLs per month!

That’s a pretty convenient way to quickly find out the Alexa rank and daily traffic for 150 websites!

I hope you’ll find this tip useful in your SEO research.

If you have any questions, feel free to share them in the comments section.

Subscribe to my weekly newsletter packed with tips & tricks around AI, SEO, coding and smart automations